This article is part of a larger series of articles titled “Azure Networks for Architects”.

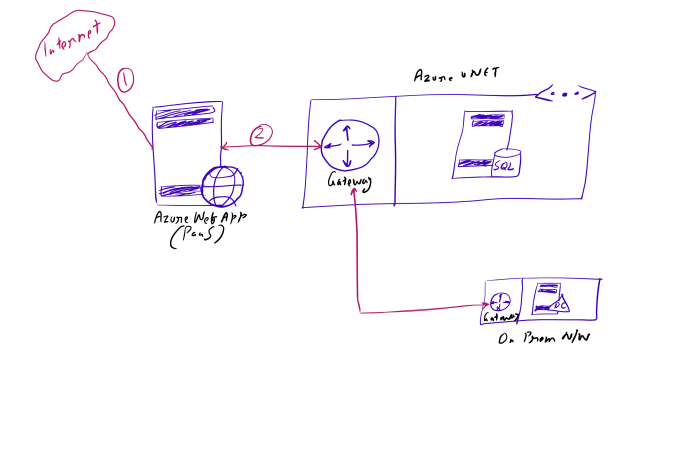

In this article, we will analyze the options available for building a DMZ or a perimeter network on Azure while using PaaS services like Azure Web App. Let’s start with a network design and see what’s wrong with it.

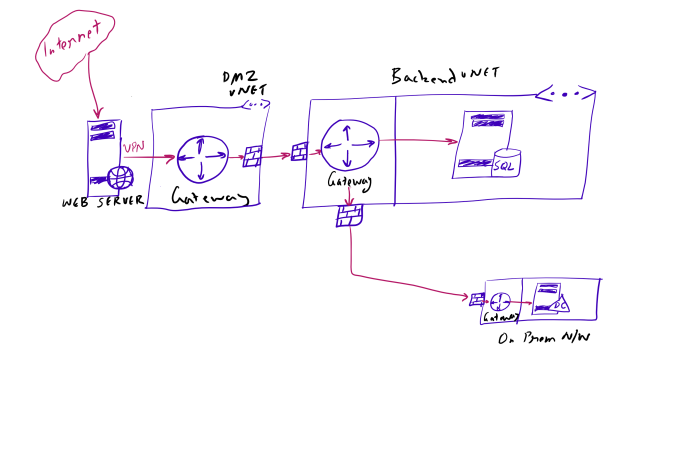

In the above design, users access a typical website hosted on Azure Web App (marked 1), which in turn needs to access a SQL Server VM hosted in an Azure vNet. The Azure Web App is connected to the Azure vNet using a point-to-site (P2S) VPN (marked 2). The vNet, in turn, is connected to on-premises resources using a VPN. As usual, the vNet has a dedicated subnet for hosting the VPN gateway.

The above is a typical scenario that is recommended on Azure (and that many have implemented), in which a PaaS service like Azure Web App can access backend resources (like a SQL database) through vNet connectivity, and even on-premises resources when the vNet is further connected to the on-premises network via VPN.

However, this network design has a couple of security flaws that are unacceptable in many scenarios.

Flaw # 1: Unrestricted access to public facing resources

If you look at the above design, traffic from the internet to Azure Web App flows uninterrupted. Azure Web App does not offer any mechanism to apply our own security policies to incoming traffic. For example, what if we don’t want to expose the web app to the internet at all? We don’t even have an option to turn off public endpoints. Threats like DDoS attacks or IP spoofing simply go unchecked.

Wait… someone might say that Microsoft states it has security infrastructure in place against attacks like DoS. Well, read again. The security checks that Microsoft provides are for “their resources”, not “your resources”. It is a very common misconception to read Microsoft’s security of its own platform as Microsoft securing customers’ endpoints! No one provides anything for free. Microsoft does provide some security, but it is very basic. For example, Microsoft will monitor whether another tenant is trying to attack your system from within Azure and prevent it, but it doesn’t protect against attacks originating from outside Azure. This is the statement you need to look for: “Windows Azure’s DDoS protection also benefits applications. However, it is still possible for applications to be targeted individually. As a result, customers should actively monitor their Windows Azure applications.” Note the word “also”. It means that protecting customers’ apps is not its primary aim, although you will certainly get some basic protection because the underlying infrastructure is secured.

If you really want to use Microsoft services to prevent attacks, you need to pay for them. The service is called “Azure Security Center”. However, even Azure Security Center doesn’t offer protection to Azure Web Apps unless you are on the “Premium Plan with ASE”. In a nutshell, Microsoft has clearly written in its documentation (so don’t blame them) that they don’t provide protection for tenants’ endpoints. So remember: you are very much exposed to all kinds of attacks, and protecting against them is up to you… not Microsoft Azure.

Coming back to the main point, the first reason the above scenario is unacceptable is that “there is no control over inbound traffic for public-facing resources”. Every organization wants to host (at minimum) a basic firewall that analyzes packets at different layers (especially the higher ones) and allows or rejects incoming traffic based on the policies the business demands, and Azure Web Apps offer none of this. Such public-facing resources become the weakest link, or the entry point, for attacks on your overall system.
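To make “analyzes packets at different layers” concrete, here is a minimal Python sketch of the kind of layered inbound policy such a firewall applies: a network-layer check on the source address, followed by an application-layer check on the HTTP method and path. It is only an illustration under stated assumptions: the `Request` type, the blocked range (a documentation-only address block), the allowed methods and the path prefixes are all hypothetical, and real firewalls and WAFs express such rules in their own configuration languages.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    """Hypothetical, simplified view of an inbound HTTP request."""
    source_ip: str
    method: str
    path: str


# Hypothetical policy values, for illustration only.
BLOCKED_NETWORKS = [ipaddress.ip_network("203.0.113.0/24")]  # documentation range
ALLOWED_METHODS = {"GET", "POST"}
BLOCKED_PATH_PREFIXES = ("/admin", "/.env")


def network_layer_allows(req: Request) -> bool:
    """Lower-layer check: reject traffic from blocked source ranges."""
    addr = ipaddress.ip_address(req.source_ip)
    return not any(addr in net for net in BLOCKED_NETWORKS)


def application_layer_allows(req: Request) -> bool:
    """Higher-layer check: inspect the HTTP method and request path."""
    if req.method not in ALLOWED_METHODS:
        return False
    return not req.path.startswith(BLOCKED_PATH_PREFIXES)


def firewall_allows(req: Request) -> bool:
    """Admit the request only if every layer allows it."""
    return network_layer_allows(req) and application_layer_allows(req)


print(firewall_allows(Request("198.51.100.7", "GET", "/index.html")))  # True
print(firewall_allows(Request("203.0.113.9", "GET", "/index.html")))   # False
```

A request is admitted only if every layer allows it. This is the kind of checkpoint that, in the design above, cannot be placed in front of the Azure Web App.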

Flaw # 2: No/less cushion between public facing resource and backend resources

One of the important principles of security is “defense in depth”, also called the “castle” approach. It says that we should increase the distance between the external world and a protected resource, and secure it with multiple security controls, one at each layer. So, if your public-facing web app accesses a SQL Database directly, your SQL Database is at risk. This is also why software design recommends a “service layer” (not necessarily a web service) through which all access to backend resources happens. However, we don’t want to go into the details of software design, as it would dilute the topic, so let’s come back to networks.

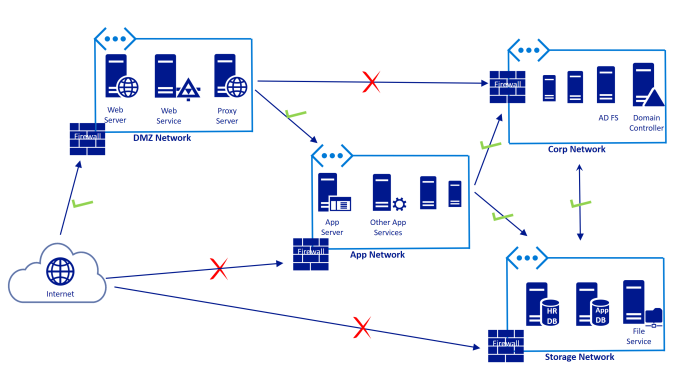

When security is the top priority, organizations maintain complete isolation between internal resources and the external world. The figure shows a very high-level network design that many organizations follow for hosting public-facing resources, irrespective of the cloud platform’s capabilities.

Here are the characteristics of the above design.

- The public-facing resources are in a dedicated network of their own, with firewalls and other security devices facing the internet. This means we control all traffic flowing in and out of the public-facing resources. We can even block all public inbound traffic, or allow selective traffic based on the organization’s security policies, without touching the web resource at all, because these controls are placed on the network itself.

- Protected resources (DBs, DCs, etc.) are in their own networks; here you’ll see two such separate networks. Whether to use entirely separate networks or just subnets is a trade-off between performance and security, which my previous post discusses.

- Communication between resources in the two networks happens via the networks’ gateways; the resources themselves don’t connect directly. Compare this with the previous approach: in the flawed design, the Azure Web App connected to the SQL Database via the backend vNet’s gateway, so there was only one entity between the web app and the database. Now there are two layers (the gateway and security policies of the DMZ vNet, and the security policies and gateway of the backend vNet). So we have essentially increased the distance between the public-facing resource and the protected resource, and we can deploy extra layers of security controls between them. This is a better design from the “defense in depth” point of view.
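The “distance” argument can be modeled as a chain of controls that a request must pass in order. The Python sketch below is a toy model, not an Azure API: the control names follow the two designs discussed here (two layers in the DMZ design, each with a gateway and security policies, so four controls in total), while the request fields and the checks behind each control (including port 1433, the default SQL Server port) are hypothetical.

```python
from typing import Callable, Dict, List, Optional, Tuple

Request = Dict[str, object]
Control = Tuple[str, Callable[[Request], bool]]


def first_blocking_control(path: List[Control], req: Request) -> Optional[str]:
    """Walk the controls in order; return the name of the first one that
    rejects the request, or None if the request reaches the backend."""
    for name, allows in path:
        if not allows(req):
            return name
    return None


# Hypothetical checks, one per control; real policies would be far richer.
def has_tunnel(req: Request) -> bool:
    return bool(req.get("via_gateway"))


def dmz_egress_ok(req: Request) -> bool:
    return req.get("destination") == "sql-vm"


def backend_ingress_ok(req: Request) -> bool:
    return req.get("port") == 1433  # default SQL Server port


# Flawed design: web app -> backend vNet gateway -> SQL VM.
FLAWED: List[Control] = [("backend vNet gateway", has_tunnel)]

# DMZ design: two network layers, four controls, between web app and database.
DMZ: List[Control] = [
    ("DMZ vNet security policies", dmz_egress_ok),
    ("DMZ vNet gateway", has_tunnel),
    ("backend vNet gateway", has_tunnel),
    ("backend vNet security policies", backend_ingress_ok),
]

probe = {"via_gateway": True, "destination": "sql-vm", "port": 3389}  # RDP probe
print(first_blocking_control(FLAWED, probe))  # None
print(first_blocking_control(DMZ, probe))     # backend vNet security policies
```

Here a compromised web app probing the SQL VM on RDP port 3389 passes straight through the single control in `FLAWED`, but is stopped by the backend vNet’s security policies in `DMZ`. Adding layers does not make any single check stronger; it gives an attacker more independent checks to defeat.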

The above design addresses both problems I mentioned (control over inbound traffic and defense in depth).

The “How” on Azure?

Now, the question that remains is how to achieve the above level of isolation on Azure networks while using PaaS services like web apps. There are two ways to address this problem on Azure. The first approach involves extra cost, while the other involves extra configuration (and a little extra cost too).

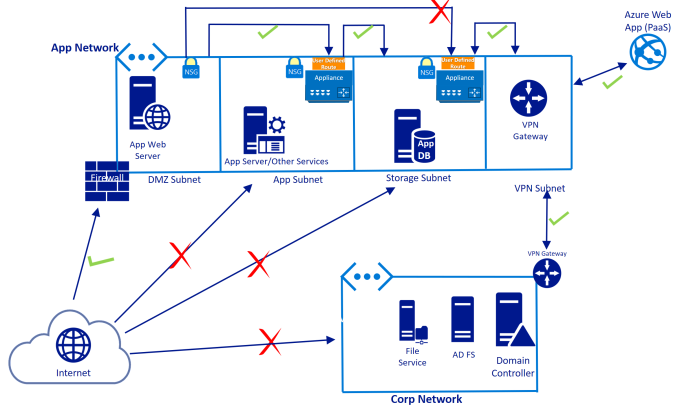

Approach 1: Using Azure App Service Premium Plan

Azure App Service offers different plans/editions. The highest of these is the “Premium” plan, which can be used with two options:

- using App Service Environment (ASE)

- without using ASE

When we use ASE, the web apps are deployed within an Azure network. This can be either your own existing network or a new dedicated one. The App Service servers “actually” become part of the Azure network (without indirect mechanisms like a P2S VPN). The main benefit of this approach over a VPN is that you can now use all the Azure vNet security concepts you are already familiar with (NSG, NSA, UDR…). So you can control inbound traffic at a fine-grained level, and you can perform deep packet inspection using advanced security appliances connected to your network.
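Part of what makes NSGs useful for fine-grained control is their simple evaluation model: rules are processed in priority order, lower numbers first, and processing stops at the first rule that matches; traffic that matches no custom rule falls through to the default rules, which end in `DenyAllInBound` (priority 65500). The sketch below models that behaviour in Python under simplifying assumptions: it matches only source CIDR and destination port, ignores protocol, destination address and service tags, and omits the other default inbound rules such as `AllowVNetInBound`. The custom rule names and address ranges are hypothetical, and no Azure SDK is involved.

```python
import ipaddress
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Rule:
    name: str
    priority: int          # lower number = evaluated first
    source: str            # source CIDR, or "*" for any
    port: Optional[int]    # destination port, None for any
    action: str            # "Allow" or "Deny"

    def matches(self, src_ip: str, port: int) -> bool:
        if self.source != "*":
            if ipaddress.ip_address(src_ip) not in ipaddress.ip_network(self.source):
                return False
        return self.port is None or self.port == port


# Simplified stand-in for the NSG catch-all default inbound rule.
DENY_ALL = Rule("DenyAllInBound", 65500, "*", None, "Deny")


def evaluate(rules: List[Rule], src_ip: str, port: int) -> Rule:
    """Return the first matching rule, checking rules in priority order."""
    for rule in sorted([*rules, DENY_ALL], key=lambda r: r.priority):
        if rule.matches(src_ip, port):
            return rule
    return DENY_ALL  # not reached: DENY_ALL matches everything


# Hypothetical custom rules, deliberately listed out of priority order.
RULES = [
    Rule("AllowHttps", 200, "*", 443, "Allow"),
    Rule("DenyKnownBadRange", 100, "203.0.113.0/24", None, "Deny"),
]

print(evaluate(RULES, "203.0.113.5", 443).name)    # DenyKnownBadRange
print(evaluate(RULES, "198.51.100.20", 443).name)  # AllowHttps
print(evaluate(RULES, "198.51.100.20", 22).name)   # DenyAllInBound
```

Because evaluation follows priority rather than list order, HTTPS traffic from the blocked range is denied by the priority-100 rule even though `AllowHttps` is listed first, and traffic on a port that no custom rule mentions, such as 22, falls through to `DenyAllInBound`.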

When your app needs to connect to backend resources, you can connect the two networks via their gateways without actually connecting your web app to the backend network. However, this is a costly option just for implementing security, and it will increase the TCO of your solution. ASE is really worth the investment if you also need other ASE features (such as much larger scalability, more storage space, etc.).

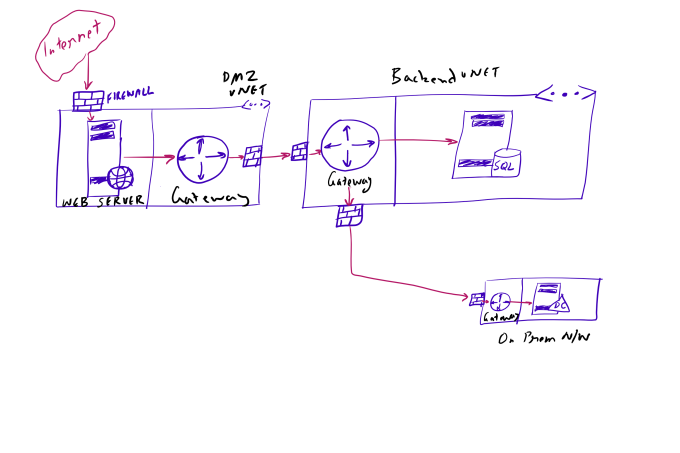

Approach 2: Using vNet to vNet connectivity

In this approach, instead of hosting web apps in an ASE, we simply create a new dedicated, public-facing network and connect the Azure Web App to it via VPN, as shown in the figure. Notice that, compared to the previous design, the only difference is that the web app connects to the DMZ vNet via VPN rather than actually being part of it. But this small change makes a BIG difference. In this context, a VPN connection is not the same as actually being part of the network, because this approach still doesn’t provide the ability to control public-facing inbound traffic. The web app is still exposed to the internet without any checks and balances (well, at least not the ones we wanted).

However, this design definitely solves the defense-in-depth problem. Perhaps, in the future, Microsoft will provide a capability to place all web apps within a network (networks don’t cost us anything), or an extra capability to filter public inbound traffic.

Until then, only ASE offers the features that an enterprise-grade solution needs. Any non-ASE solution on Azure Web App is not completely secure, at least by the book.

I hope you enjoyed this article. In the next article, we’ll take a look at other aspects of network designs.

This series is also mirrored at blogs.comtecinfo.com. The links below will be updated as new articles are posted.

- Next Article (Not published yet)

- Previous Article

- Index/Main Page/Start of Series

-Rahul Gangwar

https://www.linkedin.com/in/gangwar
